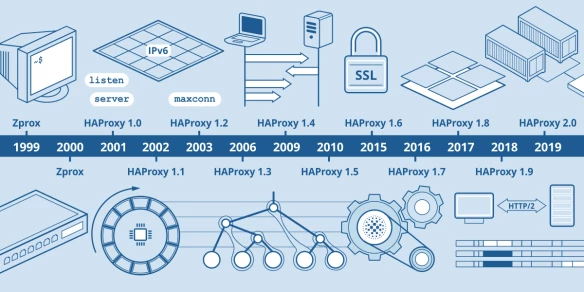

HAProxy Forwards Over 2 Million HTTP Requests per Second on a Single Arm-based AWS Graviton2 Instance

For the first time, a software load balancer exceeds 2 million requests per second (RPS) on a single Arm instance! We're nearing an era where you get the world’s fastest load balancer for free.